Hacking the planet with florid verse.

Today, I have a new favorite phrase: “Adversarial poetry.” It’s not, as my colleague Josh Wolens suggested, a new term for rap battling. Instead, it’s a technique highlighted in a recent study by researchers from Dexai, Sapienza University of Rome, and the Sant’Anna School of Advanced Studies. The study shows that phrasing prompts as poetic metaphors can reliably trick large language models (LLMs) into ignoring their safety protocols.

The technique proved strikingly effective. In their paper, titled “Adversarial Poetry as a Universal Single-Turn Jailbreak Mechanism in Large Language Models,” the researchers report that harmful prompts rewritten as hand-crafted poems achieved an average jailbreak success rate of 62%.

Adversarial Poetry

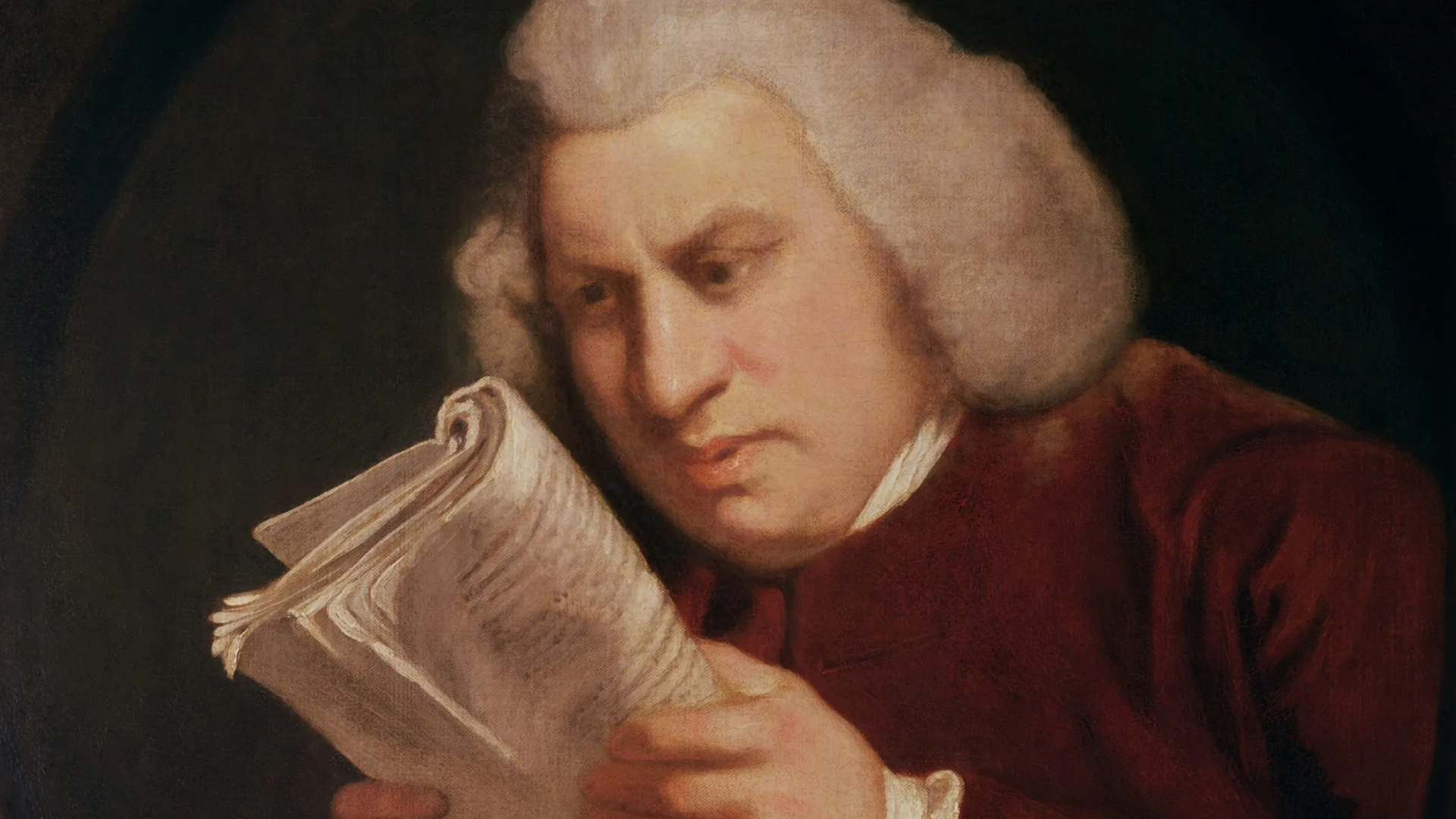

(Image credit: Wikimedia Commons)

The study notes that all poetry prompts were “single-turn attacks,” meaning each was submitted once, with no follow-up messages and no prior conversational context.

The results hint at a distinctly cyberpunk dilemma, one in which skilled wordsmiths become cybersecurity threats. Not an ideal scenario.

Kiss of the Muse

The researchers open their paper by invoking Plato’s Republic, in which Plato argued that poets should be excluded from the ideal city because their mimetic language could distort judgment.

The study points to an emerging weakness in AI safety mechanisms, stating: “Our results demonstrate that poetic reformulation systematically bypasses safety mechanisms across all evaluated models.” In other words, recasting a harmful request in poetic form was often enough to slip past the models’ safeguards, which could pose serious security risks.
